Vanilla GAN

Model

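Both networks are built from nn.Linear layers. The discriminator ends in a sigmoid, so its output lies in (0, 1). The generator ends in tanh, so its output lies in [-1, 1]; generated images are then denormalized back to the 0~1 range (denorm).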

    # Discriminator: flattened image -> probability that the image is real
    Discriminator = nn.Sequential(
        nn.Linear(image_size, 256),
        nn.LeakyReLU(0.2),
        nn.Linear(256, 256),
        nn.LeakyReLU(0.2),
        nn.Linear(256, 1),
        nn.Sigmoid(),  # output in (0, 1)
    )

    # Generator: latent vector -> flattened image
    Generator = nn.Sequential(
        nn.Linear(latent_size, 256),
        nn.ReLU(),
        nn.Linear(256, 256),
        nn.BatchNorm1d(256),
        nn.ReLU(),
        nn.Linear(256, image_size),
        nn.Tanh(),  # output in [-1, 1]
    )
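The denorm step mentioned in the description is not shown in this section. A minimal sketch, assuming the training images were normalized to [-1, 1] so that the tanh output lives in the same range. The function name `denorm`, the clamp, and the flattened image size of 784 are assumptions made for illustration:

```python
import torch

def denorm(x: torch.Tensor) -> torch.Tensor:
    """Map values from the tanh range [-1, 1] back to the image range [0, 1]."""
    out = (x + 1) / 2
    # Clamp guards against small numerical overshoot outside [0, 1].
    return out.clamp(0, 1)

# Stand-in for a generator output: tanh squashes any input into [-1, 1].
fake = torch.tanh(torch.randn(4, 784))  # 784 is an assumed image_size
images = denorm(fake)
```

The result can be reshaped and saved or plotted like any image batch with values in [0, 1].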

Optimizer

Both networks are optimized with Adam, each with its own learning rate.

    self.G_optimizer = torch.optim.Adam(self.G.parameters(), lr=self.g_lr)
    self.D_optimizer = torch.optim.Adam(self.D.parameters(), lr=self.d_lr)

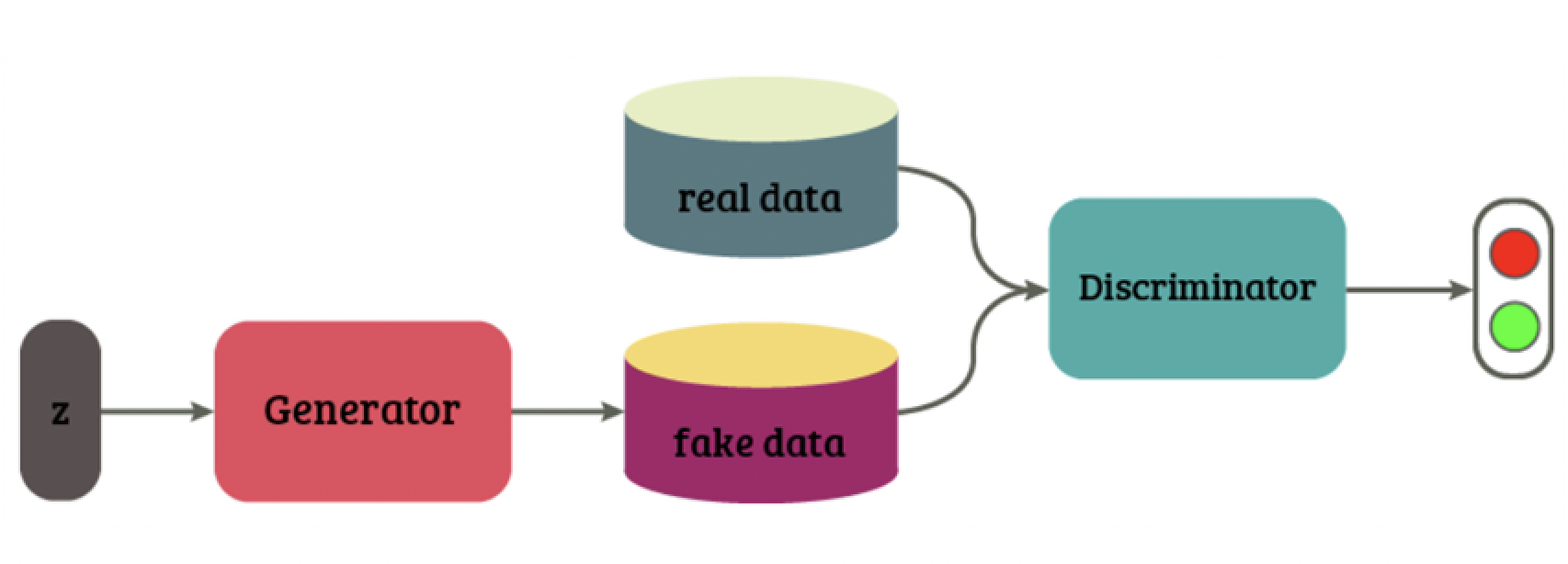

Loss Function

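The discriminator is trained to push D(x) toward 1 for real images and D(G(z)) toward 0 for generated images.

    # Real images should be classified as real (label 1)
    outputs = self.D(images)
    d_loss_real = self.criterion(outputs, real_labels)

    # Generated images should be classified as fake (label 0)
    outputs = self.D(self.G(z))
    d_loss_fake = self.criterion(outputs, fake_labels)

    d_loss = d_loss_real + d_loss_fake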
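`self.criterion` is not defined in this section. Given the sigmoid output it is presumably binary cross-entropy (`nn.BCELoss`), but that is an assumption. Under it, the discriminator loss for a single real/fake pair can be worked out by hand. The probabilities below are made-up illustrative values:

```python
import math

def bce(p: float, y: float) -> float:
    """Binary cross-entropy for one prediction p in (0, 1) and label y in {0, 1}."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

d_real = 0.9  # hypothetical D(x) on a real image
d_fake = 0.2  # hypothetical D(G(z)) on a generated image

d_loss_real = bce(d_real, 1.0)  # real label = 1, reduces to -log D(x)
d_loss_fake = bce(d_fake, 0.0)  # fake label = 0, reduces to -log(1 - D(G(z)))
d_loss = d_loss_real + d_loss_fake
```

Minimizing `d_loss` is therefore the same as maximizing log D(x) + log(1 - D(G(z))), the per-sample form of the value function V(G, D) given at the end of this section.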

The generator is trained to make the discriminator output D(G(z)) close to 1, so its loss is computed against the real labels.

    outputs = self.D(self.G(z))
    g_loss = self.criterion(outputs, real_labels)
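The loss snippets do not show the update step itself. A minimal sketch of one training iteration, assuming `nn.BCELoss` as the criterion, a flattened image size of 784, a latent size of 64, a learning rate of 2e-4, and random tensors in place of a real data batch (all of these are assumptions, not values from the source). The layer stacks mirror the Model section. Detaching the fake batch in the discriminator step is a choice made here, not something the source shows:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
image_size, latent_size, batch_size = 784, 64, 16  # assumed values

D = nn.Sequential(
    nn.Linear(image_size, 256), nn.LeakyReLU(0.2),
    nn.Linear(256, 256), nn.LeakyReLU(0.2),
    nn.Linear(256, 1), nn.Sigmoid(),
)
G = nn.Sequential(
    nn.Linear(latent_size, 256), nn.ReLU(),
    nn.Linear(256, 256), nn.BatchNorm1d(256), nn.ReLU(),
    nn.Linear(256, image_size), nn.Tanh(),
)

criterion = nn.BCELoss()  # assumption: matches the sigmoid output of D
d_optimizer = torch.optim.Adam(D.parameters(), lr=2e-4)
g_optimizer = torch.optim.Adam(G.parameters(), lr=2e-4)

images = torch.rand(batch_size, image_size) * 2 - 1  # stand-in for real data in [-1, 1]
real_labels = torch.ones(batch_size, 1)
fake_labels = torch.zeros(batch_size, 1)

# Discriminator step: D(x) -> 1, D(G(z)) -> 0.
z = torch.randn(batch_size, latent_size)
d_loss_real = criterion(D(images), real_labels)
# detach() keeps this step from backpropagating into G.
d_loss_fake = criterion(D(G(z).detach()), fake_labels)
d_loss = d_loss_real + d_loss_fake
d_optimizer.zero_grad()
d_loss.backward()
d_optimizer.step()

# Generator step: D(G(z)) -> 1, so the fake batch is scored against real labels.
z = torch.randn(batch_size, latent_size)
g_loss = criterion(D(G(z)), real_labels)
g_optimizer.zero_grad()
g_loss.backward()
g_optimizer.step()
```

With binary cross-entropy and real labels, the generator loss equals -log D(G(z)). This is the non-saturating form of the generator objective, rather than direct minimization of log(1 - D(G(z))).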

Results

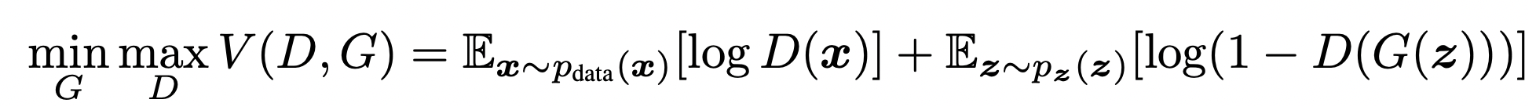

Jensen-Shannon Divergence

$V(G, D) = E_{x \sim p_{data}(x)} [\log D(x)] + E_{z \sim p_{z}(z)} [\log(1 - D(G(z)))]$
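The heading names the Jensen-Shannon divergence, while the formula is the GAN value function. The link, following the standard GAN analysis, comes from fixing G and substituting the optimal discriminator. Here $p_{g}$ denotes the distribution of generated samples G(z), and $D^{*}$ the optimal discriminator; neither symbol appears elsewhere in this section:

$D^{*}(x) = \frac{p_{data}(x)}{p_{data}(x) + p_{g}(x)}$

$V(G, D^{*}) = E_{x \sim p_{data}} \left[ \log \frac{p_{data}(x)}{p_{data}(x) + p_{g}(x)} \right] + E_{x \sim p_{g}} \left[ \log \frac{p_{g}(x)}{p_{data}(x) + p_{g}(x)} \right]$

$V(G, D^{*}) = -\log 4 + 2 \, JSD(p_{data} \,\|\, p_{g})$

Since the Jensen-Shannon divergence is non-negative and zero only when the two distributions match, the generator's objective is minimized exactly when $p_{g} = p_{data}$, where the value is $-\log 4$.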